I recently purchased a new server from SYS (an OVH sub-brand), but after the server was activated, there was no option to install ESXi. It seems SYS decided to remove it completely from its lineup. There was also no option to install a modern Windows Server (only Windows Server 2008 was available), so Hyper-V was not an option either. I could have used Proxmox or XenServer, but, as you might know, Veeam doesn't support hypervisors other than ESXi and Hyper-V, and switching from Veeam to something different, such as Acronis, was not an option: I don't have enough time.

I searched the web for a way to install ESXi from rescue mode but found nothing, so I decided to write a guide about it (if I managed to install it, of course).

Installing ESXi from rescue mode

First of all, boot the server in rescue mode: go to your SYS control panel and select boot options -> rescue mode. Note that, to log in to rescue mode, you need to add an SSH key to your account.

You will also need an ESXi installer ISO, which you can download from the VMware website. Copy it to the rescue system (for example with scp) so that the command below can find it.
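
Before booting a multi-hundred-megabyte installer, it is worth checking that the copy is intact. The following is a minimal sketch to run in the rescue shell, assuming the download page lists a SHA-256 checksum for the ISO and that `sha256sum` (GNU coreutils) is available on the rescue system; the function name `verify_iso` is hypothetical.

```sh
# Hypothetical helper: verify_iso FILE EXPECTED_SHA256
# Compares the SHA-256 of FILE with the checksum published on the download page.
verify_iso() (
    file=$1
    # Accept the checksum in upper or lower case.
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    # sha256sum prints "<hash>  <file>"; keep only the hash.
    actual=$(sha256sum "$file" | cut -d ' ' -f 1)
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (got '$actual')" >&2
        exit 1
    fi
)
```

For example, `verify_iso VMware-VMvisor-Installer-6.5.0-4564106.x86_64.iso <checksum from the download page>` prints `OK: ...` and returns 0 only if the hashes match; a missing file also counts as a mismatch.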

Once rescue mode has booted, log in as root and run this command:

    kvm -machine pc-i440fx-2.1 \
        -cpu host \
        -smp cpus=2 \
        -m 4096 \
        -hda /dev/sda \
        -cdrom VMware-VMvisor-Installer-6.5.0-4564106.x86_64.iso \
        -vnc :1 \
        -net nic -net user,hostfwd=tcp::80-:80,hostfwd=tcp::443-:443,hostfwd=tcp::2222-:22

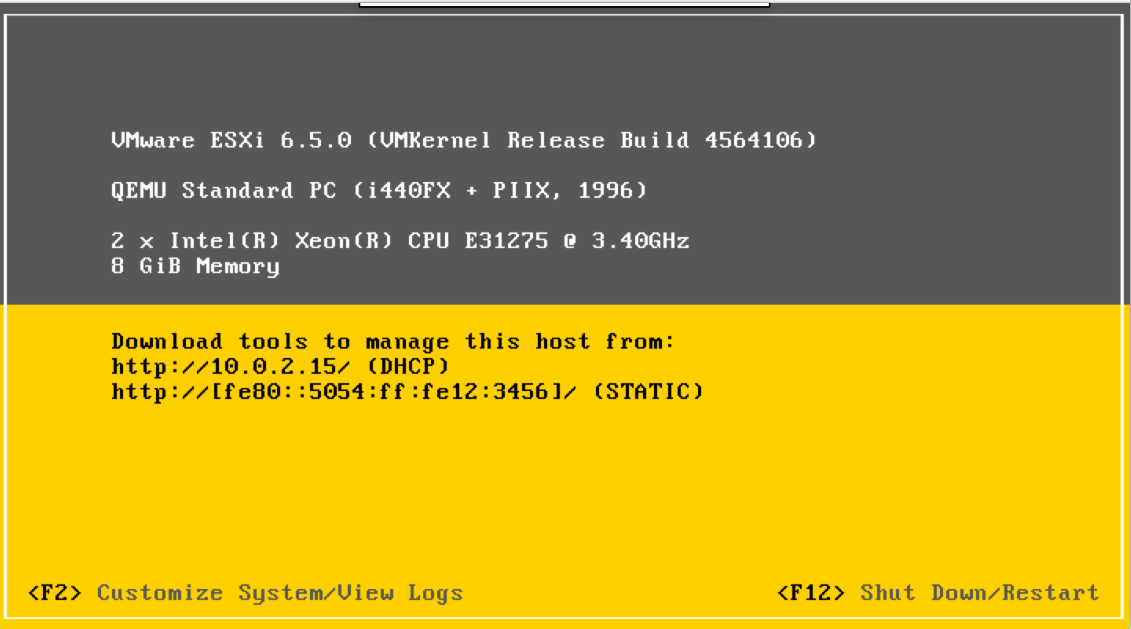

Replace the ISO path with the actual path of the ESXi image you downloaded. I also tried installing ESXi 6.7, but it didn't boot, maybe because of a driver issue. Feel free to try it; it may work for you.

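This command starts a VM that uses the server's first drive (/dev/sda) as its main disk, so ESXi gets installed directly on the physical drive. It also forwards host ports 80 and 443 to the same ports in the VM, and host port 2222 to the VM's SSH port 22.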
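
Since the same VM has to be started again later without the installer, the command can be wrapped in a small POSIX sh function that takes the disk and an optional ISO path. This is a sketch under assumptions: the function name `start_esxi_vm` and the `DRY_RUN` switch are hypothetical, and it assumes the rescue system provides the same `kvm` binary used above.

```sh
# Hypothetical helper: start_esxi_vm DISK [ISO]
# Builds the same kvm command as above. When ISO is omitted, -cdrom is left out.
# Set DRY_RUN=1 to print the command instead of running it.
start_esxi_vm() (
    disk=$1
    iso=${2:-}

    # Positional parameters serve as a POSIX-compatible argument list.
    set -- -machine pc-i440fx-2.1 -cpu host -smp cpus=2 -m 4096 -hda "$disk"
    if [ -n "$iso" ]; then
        set -- "$@" -cdrom "$iso"
    fi
    set -- "$@" -vnc :1 \
        -net nic \
        -net user,hostfwd=tcp::80-:80,hostfwd=tcp::443-:443,hostfwd=tcp::2222-:22

    if [ "${DRY_RUN:-0}" = 1 ]; then
        # Print "kvm" followed by each argument, separated by spaces.
        printf 'kvm'
        printf ' %s' "$@"
        printf '\n'
    else
        kvm "$@"
    fi
)
```

For example, `DRY_RUN=1 start_esxi_vm /dev/sda VMware-VMvisor-Installer-6.5.0-4564106.x86_64.iso` prints the full command without starting anything; drop `DRY_RUN=1` to launch the VM, and omit the ISO argument once ESXi is installed.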

You can now connect to the VM with any VNC client: use the server's public IP and port 5901 (VNC display :1 corresponds to TCP port 5900 + 1), then install ESXi.

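Once the installation is complete, reboot the VM and enable SSH access inside ESXi (in the console, under Troubleshooting Options): you will need it to configure the host network. Thanks to the port forwarding, the VM's SSH server is reachable on port 2222 of the server.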

I changed these settings in the /etc/vmware/esx.cfg file:

    /adv/Net/ManagementAddr = "<server public ip>"
    /adv/Net/ManagementIface = "vmk0"
    /adv/Misc/HostIPAddr = "<server public ip>"
    /adv/Misc/HostName = "<server hostname>"
    /net/pnic/child[0000]/name = "vmnic0"
    /net/pnic/child[0000]/mac = "<server mac address>"
    /net/routes/kernel/child[0000]/gateway = "<server public ip with last number changed to 254>"
    /net/vmkernelnic/child[0000]/name = "vmk0"
    /net/vmkernelnic/child[0000]/enable = "true"
    /net/vmkernelnic/child[0000]/tags/1 = "true"
    /net/vmkernelnic/child[0000]/ipv4broadcast = "<server public ip with last number changed to 255>"
    /net/vmkernelnic/child[0000]/dhcpv6 = "false"
    /net/vmkernelnic/child[0000]/dhcpDns = "false"
    /net/vmkernelnic/child[0000]/netstackInstance = "defaultTcpipStack"
    /net/vmkernelnic/child[0000]/dhcp = "false"
    /net/vmkernelnic/child[0000]/mac = "<server mac address>"
    /net/vmkernelnic/child[0000]/routAdv = "false"
    /net/vmkernelnic/child[0000]/ipv4netmask = "255.255.255.0"
    /net/vmkernelnic/child[0000]/portgroup = "Management Network"
    /net/vmkernelnic/child[0000]/ipv4address = "<server public ip>"
    /vsan/faultDomainName = ""
    /vsan/faultDomainVersion = "2"
    /vmkdevmgr/logical/pci#p0000:00:1f.2#0/alias = "vmhba0"
    /vmkdevmgr/pci/p0000:03:00.0/alias = "vmnic0"
    /vmkdevmgr/pci/p0000:00:1f.2/alias = "vmhba0"
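
The IP-dependent values above follow a simple rule for the /24 network these settings assume (netmask 255.255.255.0): the gateway is the public IP with the last octet set to 254, and the broadcast address is the same IP with the last octet set to 255. A small POSIX sh sketch that prints those lines for a given IP, ready to paste into esx.cfg; the function name `ovh_net_values` and the example address 203.0.113.10 are hypothetical, and the .254 gateway rule only holds if your server uses the same addressing as above.

```sh
# Hypothetical helper: ovh_net_values PUBLIC_IP
# Prints the esx.cfg lines that depend on the public IP, assuming a /24
# network whose gateway is .254 and whose broadcast address is .255.
ovh_net_values() (
    ip=$1
    # Rough sanity check only (digits and dots, four fields);
    # it does not validate octet ranges.
    case $ip in
        *[!0-9.]*) echo "not an IPv4 address: $ip" >&2; exit 1 ;;
        *.*.*.*) ;;
        *) echo "not an IPv4 address: $ip" >&2; exit 1 ;;
    esac
    prefix=${ip%.*}   # drop the last octet: 203.0.113.10 -> 203.0.113
    printf '/adv/Net/ManagementAddr = "%s"\n' "$ip"
    printf '/adv/Misc/HostIPAddr = "%s"\n' "$ip"
    printf '/net/routes/kernel/child[0000]/gateway = "%s.254"\n' "$prefix"
    printf '/net/vmkernelnic/child[0000]/ipv4address = "%s"\n' "$ip"
    printf '/net/vmkernelnic/child[0000]/ipv4netmask = "255.255.255.0"\n'
    printf '/net/vmkernelnic/child[0000]/ipv4broadcast = "%s.255"\n' "$prefix"
)
```

Running `ovh_net_values 203.0.113.10` prints, among others, a gateway of 203.0.113.254 and a broadcast address of 203.0.113.255; the hostname and MAC address lines still have to be filled in by hand.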

Now shut down the VM and reboot the server from its local hard drive (switch the boot option back from rescue mode in the SYS control panel). If everything is fine, you should be able to ping your server within a few minutes. If you can't, reboot into rescue mode, start the VM again (this time without the -cdrom flag, since you don't need it anymore) and check the logs: they should tell you why the network failed to start.
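
To avoid pinging by hand while the server reboots, a small polling loop can be run from your own machine. A minimal sketch: the function name `wait_for_ping` and the defaults of 30 attempts with a 10-second pause are arbitrary assumptions, and it relies only on `ping -c 1`, which Linux and macOS both support.

```sh
# Hypothetical helper: wait_for_ping HOST [TRIES] [DELAY]
# Pings HOST once per attempt until it answers, giving up after TRIES attempts.
wait_for_ping() (
    host=$1
    tries=${2:-30}
    delay=${3:-10}
    i=0
    while [ "$i" -lt "$tries" ]; do
        if ping -c 1 "$host" >/dev/null 2>&1; then
            echo "$host is up"
            exit 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "$host did not answer after $tries attempts" >&2
    exit 1
)
```

For example, `wait_for_ping 203.0.113.10` (with your server's IP) returns 0 as soon as the host answers, or 1 after 30 failed attempts, which is the point to go back to rescue mode and check the logs.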

Feel free to ask me for more details by sending an email to andrea@ruggiero.top.